Live Performance During COVID Times (AR)

I was approached by the Museum of Science in Boston to give a presentation about emerging technologies for live performance. I asked if they would let me blur the lines between a TEDx talk and a one-man show, offering a real-time demonstration of virtual tools using Shakespeare monologues. They were incredibly supportive and trusted me to write, develop, stage, and perform the piece from my living room, using Zoom for tech rehearsal and their YouTube channel for the actual show.

In the spirit of “E3 meets Edinburgh,” I began to craft an evening of theater, cheekily using a popular monologue from Tom Stoppard’s Rosencrantz and Guildenstern Are Dead to challenge the new normal of being “stuck in a box.” Breaking down each line of the speech and each piece of technology, I examined my own journey through scrappy storytelling, COVID, and exploring new tools to reach new audiences.

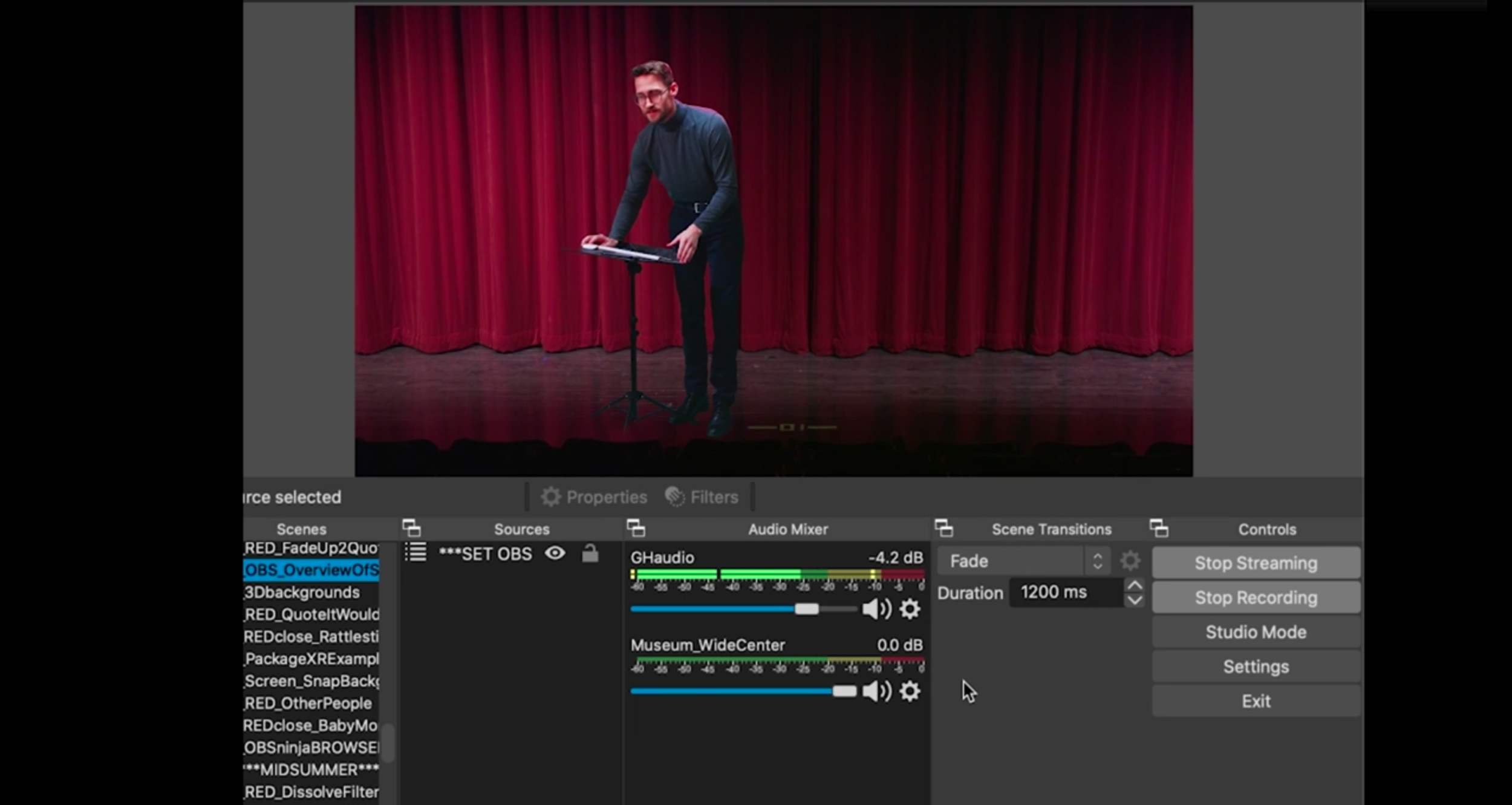

Building on my Zoom Theater Tutorial, I wanted to stress test my use of Open Broadcaster Software (OBS) by personally designing and controlling 70+ cues without a stage manager or director. I used the StreamDeck app to trigger the cues live from my smartphone and wore a single earbud to hear my audio cues and scene partners.
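To give a concrete picture of what running a cue list solo involves, here is a minimal sketch of a cue stack in Python. It is not the show file or the StreamDeck configuration: the `Cue` and `CueStack` names, the scene names, and the `switch_scene` callback are all hypothetical, and the callback simply stands in for whatever actually tells the switcher to change scenes.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Cue:
    number: int      # position in the running order
    scene: str       # hypothetical scene name to switch to
    note: str = ""   # reminder for the performer


class CueStack:
    """Steps through cues in order, like pressing a single GO button."""

    def __init__(self, cues: List[Cue], switch_scene: Callable[[str], None]):
        self.cues = sorted(cues, key=lambda c: c.number)
        self.switch_scene = switch_scene  # injected stand-in for the real switcher
        self.position = 0                 # index of the next cue to fire

    def go(self) -> Cue:
        """Fire the next cue and advance the stack."""
        if self.position >= len(self.cues):
            raise IndexError("no cues left in the stack")
        cue = self.cues[self.position]
        self.switch_scene(cue.scene)
        self.position += 1
        return cue

    def back(self) -> None:
        """Step back one cue without firing, to recover from a misfire."""
        self.position = max(0, self.position - 1)


# Usage: record the scene changes in a list instead of driving real software.
fired: List[str] = []
stack = CueStack(
    [Cue(2, "Plate shot B"), Cue(1, "Plate shot A", "turn upstage first")],
    switch_scene=fired.append,
)
stack.go()
stack.go()
print(fired)  # ['Plate shot A', 'Plate shot B']
```

Keeping the switcher behind a callback means the same running order can be rehearsed against a plain list, as above, before it is wired to anything live.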

After I mapped out the show, I began rehearsing on a homemade green screen stage using a giant tablecloth and mint-colored gym mats. (I know this looks crude, but it was important to be able to tear down the setup during the show as part of the reveal that I was in my living room.) I rehearsed and blocked until I could run the entire show in a single take. Then, I used editing software to remove the green and play around with backgrounds, filters, and perspective. This allowed me to reverse engineer which cues I needed to build and how I could trigger them live during the show.
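For anyone curious what "removing the green" means at the pixel level, here is a deliberately simplified sketch in Python. It is not the algorithm used by any particular editing software or by OBS; the function name `green_key_alpha` and the `tolerance` parameter are made up for illustration. The idea is only that a pixel becomes more transparent the more its green channel dominates red and blue.

```python
def green_key_alpha(r: int, g: int, b: int, tolerance: int = 40) -> float:
    """Return the opacity of a pixel: 0.0 is keyed out, 1.0 is fully kept.

    `tolerance` (an illustrative parameter) is how far green must exceed
    the other channels before the pixel is treated as pure screen.
    """
    dominance = g - max(r, b)      # how much greener than anything else
    if dominance <= 0:
        return 1.0                 # not green-dominant: keep the pixel
    if dominance >= tolerance:
        return 0.0                 # clearly screen: fully transparent
    return 1.0 - dominance / tolerance  # soft edge in between


print(green_key_alpha(30, 220, 40))    # 0.0, a saturated green pixel
print(green_key_alpha(200, 150, 120))  # 1.0, a skin-tone pixel
print(green_key_alpha(100, 120, 100))  # 0.5, a borderline pixel
```

Real keyers work in other color spaces and add spill suppression and edge smoothing, but the soft ramp above is the part that keeps hair and fabric edges from looking cut out with scissors.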

The Museum of Science provided me with four “plate shots” of their own empty stage at their facility. I choreographed turns in my performance so that when I cut between the backgrounds, it would appear I was being filmed by multiple cameras (I only had one camera).

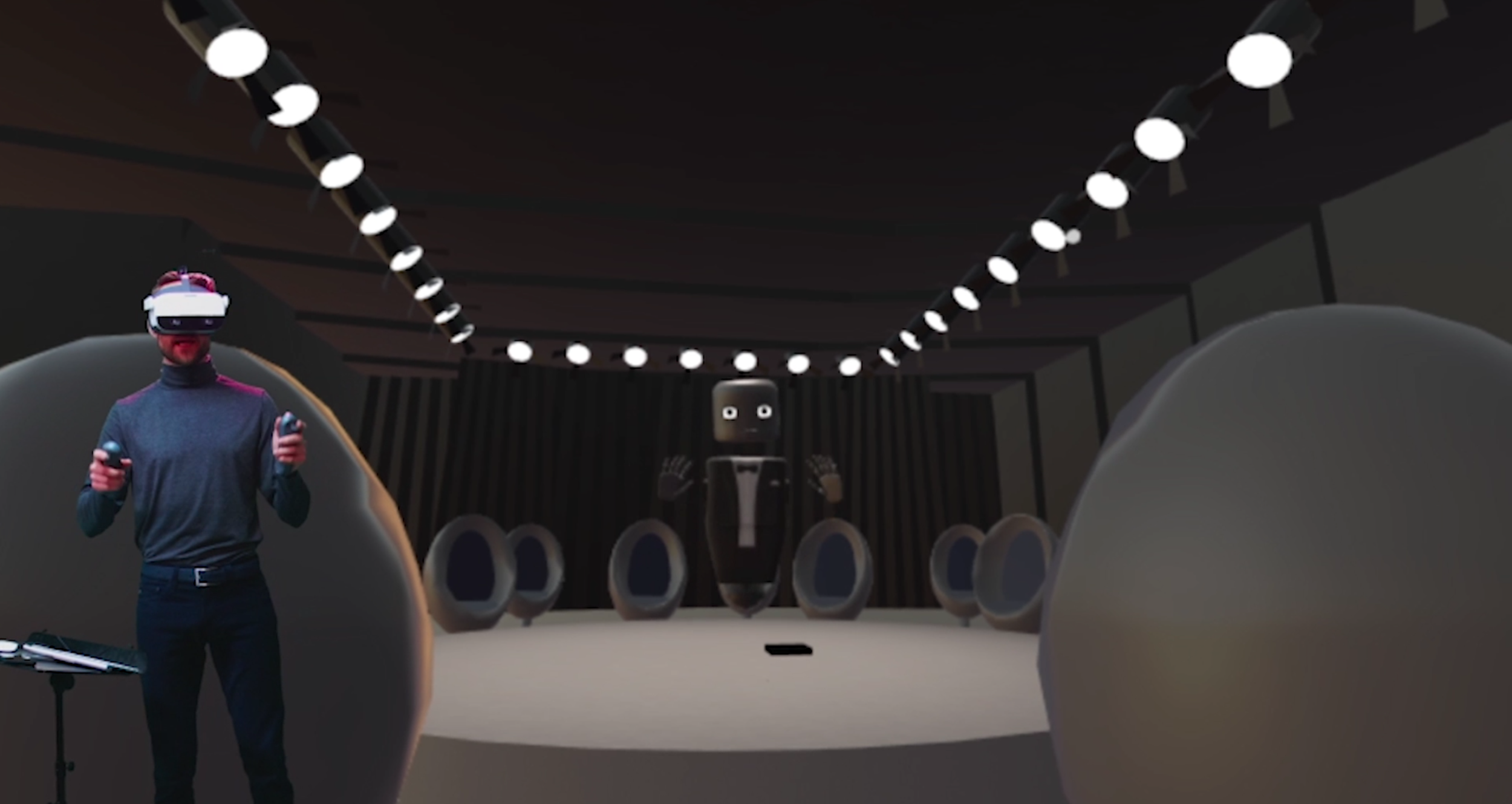

I hired a 3D projection artist, Amir Cohen, to create renders that I could composite behind or in front of my live video feed to simulate moving scenery and lighting. Amir was advertising these elements for “virtual DJs” to put themselves inside a virtual club during the lockdown. I suggested taking his work further using “alpha layers” with the ProRes 4444 video format, which allows playback of a transparent video in OBS. This workflow was rooted in the principles of basic AR, or augmented reality: virtual layers or filters are placed on top of my video to augment my appearance. They cannot detect me, but I can choreograph my movements, like a dance, to make it appear that I am manipulating or responding to these layers.
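The layering described above comes down to the standard "over" operation from alpha compositing. The sketch below shows it for a single pixel in plain Python. It assumes straight (non-premultiplied) 8-bit alpha, and the names `over` and `stack_layers` are illustrative only; it is not a description of how OBS implements compositing internally.

```python
from typing import Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def over(top: RGBA, bottom: RGB) -> RGB:
    """Composite one straight-alpha pixel over an opaque pixel."""
    r, g, b, a = top
    alpha = a / 255  # 0.0 is fully transparent, 1.0 is fully opaque
    return tuple(
        round(c_top * alpha + c_bottom * (1 - alpha))
        for c_top, c_bottom in zip((r, g, b), bottom)
    )


def stack_layers(plate: RGB, performer: RGBA, foreground: RGBA) -> RGB:
    """Background plate, then the keyed performer, then a foreground render."""
    return over(foreground, over(performer, plate))


plate = (10, 20, 30)
print(over((255, 0, 0, 0), plate))    # (10, 20, 30), transparent layer shows the plate
print(over((255, 0, 0, 255), plate))  # (255, 0, 0), opaque layer hides the plate
print(over((255, 0, 0, 128), plate))  # (133, 10, 15), roughly half and half
```

The order of the calls in `stack_layers` is what decides whether a render appears behind or in front of the performer; the layers themselves never know where the performer is.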

For the meat of the show, I wanted to demonstrate 5 real-time virtual technologies on stage:

BACKGROUND REPLACEMENT: The entire presentation used open source “muxing” techniques, often utilized by Twitch streamers, where gamers put themselves “inside” their gameplay footage and cycle through professional-looking transitions and chat overlays. The entire evening was meant to stair-step the viewer through the complexity of the technology and my adoption of the tools, beginning with simple background removal, which has become common in most virtual performances and meetings. I chose Hamlet’s most popular speech and transcribed the words behind me to demonstrate how captions could become part of the scenery.

LIVE-COMPOSITING: Pulling the curtain back, I wanted to reveal to the audience what I was doing behind the scenes, sharing my screen and demonstrating how I could trigger pre-built cues in real time. The Prologue of Henry V famously teases the limitations of a bare stage, and I relished how these words captured the theater community’s early criticisms of virtual solutions.

SCENE PARTNERS: The majority of my early experiments repurposed security cameras and baby monitor apps to host live feeds of my fellow performers. As the pandemic forced expansions to NDI and networked device capability, I wanted to find free tools that could allow anyone to share the stage with me. I couldn’t resist the chance to play Bottom from A Midsummer Night’s Dream and host the museum staff to play my fairies while I used a Snapchat filter for my donkey head.

VOLUMETRIC & MOTION CAPTURE: Serendipitously, I had spent the months prior to the pandemic in post-production for my own adaptation of Macbeth, using an Xbox to capture skeletal and facial data from my actors to “parent” to 3D avatars. It was a thrill to finally get to breathe life into the original text, represented as a point-cloud “shadow” on stage.

VIRTUAL REALITY: All the world really is a stage these days and Jaques helped me step through the “ages of man” as different avatars, easily changing costume and character with the click of a button.

The evening ended with Prospero from The Tempest, setting down my “spells” and revealing everything behind the curtain, literally. The virtual effects melted away, and I slowly took apart my green screen, turned off the lights, and allowed the audience to listen to the words of Rosencrantz one final time as we faded out to blackness.

I took a bow and the museum began a live Q&A from audience questions. The final question actually resulted in starting the show over again, and going through the opening section cue-by-cue to reveal each step of how I designed and built the show, and how I choreographed my movements to “sell” the transitions. This inspired a livestream where I broke down every single cue in the show and answered audience questions.

I had never imagined I would perform a solo show, especially not from my living room using a bunch of plugins and tricks I learned on YouTube. The greatest compliment came from my family, who didn’t realize the show was live until a cue misfired ten minutes into the show.

UPDATE: I was invited to perform a cutting of the show “at” Baltic House International Theatre Festival “in” St. Petersburg, Russia. (Watch Live Presentation)